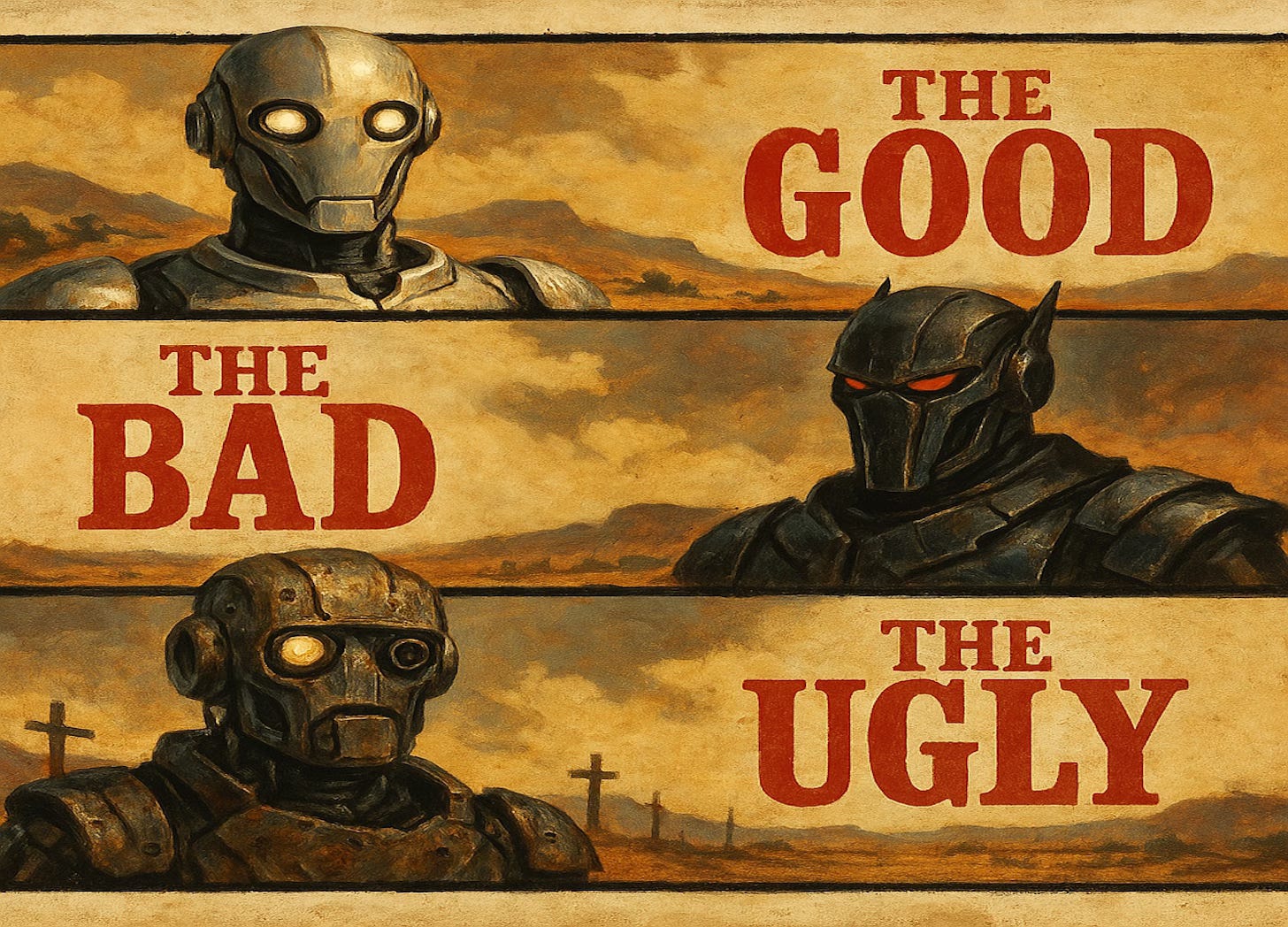

AI "Vibe Coding": The Good, The Bad, and The Ugly

I tried letting AI write my code and set up cloud infrastructure for a few weeks. Here's where it was helpful, annoying, and downright counterproductive.

I've been lucky enough to spend some serious time coding over the last six weeks or so. Some of this has been building an internal data system for CEW Advisors, and some has been upgrades to the RI Longitudinal Data System's entity resolution engine. In both cases it's the type of in-depth, heads-down coding that I love, and that I haven't gotten to do much of in the past year or two.

I decided to take advantage of these projects to try out "vibe coding". For the uninitiated, "vibe coding" is a term coined by AI researcher Andrej Karpathy in a post on X earlier this year and quickly picked up by outlets like the NYT. It refers to having generative AI write your code for you. It probably has many different interpretations. In my case it meant using a combination of chats in the Claude.ai web interface and Claude Code, installed in my terminal, to actually write code and manipulate files for me.

I'll try to keep the technical details accessible to non-developers throughout this post, but forgive me if I occasionally get too in-the-weeds.

I want to share a bit about my experience: what worked for me and what didn't. I don't want to get into a bigger-picture discussion of the social, economic, and environmental impacts of generative AI. I recognize that my setup likely doesn't reflect the experience of people working at big tech firms with specialized teams and purpose-built AI tools. This is just about my experience trying it for a few weeks, filtered through my own coding preferences and natural skeptical response to hype.

The Good

There were a few things I found to be particularly helpful when done well. Sadly, you'll find the other sections to be much longer.

Basic batch processes

Claude Code is a good time saver when you give it extremely explicit instructions for a large batch of files. For example, the existing RILDS codebase has hundreds of model files. I wanted to go through and add path comments to the top of each file, like so:

```python
# ride/models/enrollment.py
```

This would make it easier for Claude to understand where files lived in the overall project structure. But doing it by hand would have been tedious, dull, and prone to human error. Claude Code took several minutes to complete the task, and there were some weird inconsistencies I had to have it fix, but it was better than doing it manually.
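For comparison, the same batch job can be done deterministically with a short script, which rules out the "weird inconsistencies" entirely. This is a minimal sketch, assuming every model file matches `**/models/*.py` under the project root and that the comment should hold the path relative to that root (both are my assumptions, not details of the RILDS codebase):

```python
from pathlib import Path


def add_path_comments(project_root: Path, pattern: str = "**/models/*.py") -> list[Path]:
    """Prepend a '# <relative/path>' comment line to every file matching `pattern`.

    Files whose first line is already the correct comment are skipped, so the
    script is safe to re-run. Returns the list of files that were changed.
    """
    changed = []
    for path in sorted(project_root.glob(pattern)):
        comment = f"# {path.relative_to(project_root).as_posix()}"
        text = path.read_text(encoding="utf-8")
        if text.split("\n", 1)[0] == comment:
            continue  # already tagged; leave it alone
        path.write_text(f"{comment}\n{text}", encoding="utf-8")
        changed.append(path)
    return changed
```

Run it from the project root as `add_path_comments(Path("."))`. It doesn't special-case files that begin with a shebang or encoding line, which model files normally don't have.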

Rubber ducking

There's an idea in software development called "rubber duck debugging". It goes like this: you keep a rubber duck on your desk. When you run into problems you talk to the rubber duck. The idea is that literally talking out loud as if you're speaking to another person helps you think through processes and figure out where an error might be.

In practice, I found debugging with Claude was often horrible (see The Bad), but using it in the early stages to think through architecture decisions was quite useful.

You need to be fully aware that LLMs are not actually intelligent. They are "yes and" machines that will usually tell you whatever you want to hear. If I say I want to replace my car's engine with a giant vat of Cool Whip, it will tell me that's the greatest idea in the history of the world. You can't trust it to do your thinking for you. However, if you have a basic level of skepticism and are good at critical and creative thinking, then having it as a sounding board to bounce ideas off of is super useful.

I do tend to talk through ideas out loud when planning projects (sometimes to Andrey Barkov, who is not helpful), but something about the give and take with a chatbot helps you think through various cases, priorities, and pros and cons of different approaches.

Occasionally good code

We'll get into all the problems in just a minute. I hate much of the code Claude generated and had to rewrite it. But in some cases, when given enough examples of how something should be written, it does a decent job and saves you some time. If you have several very similar methods or similar tests that can't be generalized, you can have it explicitly follow that pattern. When it does so, it can save you a few minutes of manual typing.

Type hints, linting, and (first draft) docstrings

These are usually the last things I do when writing code, and it's really easy under time pressure to skip them. They tend to be boring and don't immediately impact the functionality of the code, and in the case of docstrings I always spend way too much mental energy trying to figure out the right level of documentation. Being able to tell Claude Code "add type hinting to my model" is great, and it's one of those things it does really well. I can give it basic linting instructions and it usually does a good job. Some of these are my really nitpicky preferences, like the order of imports at the top of a file. It's only okay at docstrings (see below), but it writes a decent enough first draft that helps me focus.
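For non-developers, here's roughly what that cleanup adds. The function below is a made-up example, not code from either project: the type hints declare what goes in and what comes out, and the docstring describes the arguments and return value in plain language.

```python
from datetime import date
from typing import Optional


def enrollment_days(start: date, end: Optional[date] = None) -> int:
    """Return the number of days between two dates.

    Args:
        start: The first day of enrollment.
        end: The last day of enrollment. Defaults to today if omitted.

    Returns:
        The number of days from ``start`` to ``end`` (negative if ``end``
        comes before ``start``).
    """
    if end is None:
        end = date.today()
    return (end - start).days
```

The hints don't change what the code does at runtime. They let editors and type checkers such as mypy flag mistakes, like passing a string where a `date` is expected.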

The Bad

Ok, so those were the good bits. Unfortunately for me I found them outweighed by the bad bits.

Ignores instructions and doesn't read

One of the common refrains from AI Boosters is that if the tool doesn't work as expected, the problem must be with you, the user. You get people peddling ridiculous ideas about "prompt engineering" as if it's a secret dark art, and as if starting sentences with "as a <job title>" is the alchemical lead-to-gold formula.

But here's the thing: even when I gave Claude or Claude Code explicit instructions, it would often ignore them. It didn't matter whether I gave these instructions in the prompt itself, in a project instructions file, or in the base CLAUDE.md file. It would just ignore the instructions, then insist it had done what I asked. It would straight-up lie to me like a toddler, telling me what I see with my own eyes is not what is there.

What I've found is that Claude doesn't actually "read" information it says it has. When I asked it to create docstrings (inline technical documentation) it would hallucinate rather than read the actual code it was documenting. It would just make up methods or arguments that didn't exist. Or it would claim a method returned something that it didn't.
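Because of this, it's worth checking generated docstrings against the real signatures rather than trusting them. Here is a rough sketch that reports arguments a docstring documents but the function doesn't actually have. It assumes Google-style docstrings with an `Args:` section (my assumption; other styles would need different parsing), and the name `phantom_args` is hypothetical:

```python
import inspect
import re
from typing import Callable


def phantom_args(func: Callable) -> set[str]:
    """Return names listed under 'Args:' that are not real parameters of `func`."""
    doc = inspect.getdoc(func) or ""  # getdoc also normalizes indentation
    real = set(inspect.signature(func).parameters)
    documented: set[str] = set()
    in_args = False
    args_indent = None
    for line in doc.splitlines():
        stripped = line.strip()
        if not in_args:
            in_args = stripped == "Args:"
            continue
        if not stripped:
            continue
        indent = len(line) - len(line.lstrip())
        if indent == 0:
            break  # next section header, e.g. "Returns:"
        if args_indent is None:
            args_indent = indent  # first entry sets the argument indentation
        if indent == args_indent:  # deeper lines are continuations, not arguments
            match = re.match(r"(\*{0,2}\w+)\s*(\([^)]*\))?:", stripped)
            if match:
                documented.add(match.group(1).lstrip("*"))
    return documented - real
```

This only catches invented arguments. It won't notice a docstring that misdescribes what a method returns, so a human read is still needed.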

When I was trying to use it to help set up AWS services it wasted hours of my time (more on that below) insisting on a certain course of action while sounding like this was information it was quoting from the AWS documentation. It turned out it was just guessing, even though it can query the official docs at any time. Once it did, it gave one of those obsequious apologies, the "you're right to be upset" boilerplate these tools produce instead of doing the job right in the first place.

I know you shouldn't anthropomorphize these tools, but it's hard in that situation not to get extremely frustrated. Then you realize you have to double-check everything it's done so far, which negates any time savings such a tool may have provided.

Bloated, crappy, wet code

One of the hallmarks of good code (at least the type that I write) is that each function does exactly one thing with predictable results. This is generally referred to as the "single-responsibility" principle. It makes code far easier to test (which is what makes test-driven development practical), and it makes code more stable and more maintainable over time.

Another is the DRY principle: Don't Repeat Yourself. Code should not have a bunch of copy-paste repetition. If it does, it becomes much harder to extend or maintain.
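A toy illustration of both principles, using made-up functions rather than anything from my projects. At this size the duplication looks harmless, but if the cleanup rule ever changes, the first version has to be fixed in every copy, while the second changes in exactly one place and each piece can be tested on its own:

```python
# Before: cleaning and formatting are tangled together,
# and the same cleanup is copy-pasted for each field.
def summarize_student(record: dict) -> str:
    first = record.get("first_name", "").strip().title()
    last = record.get("last_name", "").strip().title()
    return f"{last}, {first}"


# After: each function has one job, and the cleanup lives in one place.
def clean_name(raw: str) -> str:
    """Trim whitespace and normalize capitalization for a single name."""
    return raw.strip().title()


def format_display_name(first: str, last: str) -> str:
    """Format a display name as 'Last, First'."""
    return f"{last}, {first}"


def summarize_student_v2(record: dict) -> str:
    """Build a display name from a raw record using the single-purpose helpers."""
    return format_display_name(
        clean_name(record.get("first_name", "")),
        clean_name(record.get("last_name", "")),
    )
```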

Believe me, I've written enough bad code in my time to know it when I see it. Claude generally writes bad code.

Everything it does breaks the third and fourth lines of the Zen of Python: simple is better than complex, and complex is better than complicated. It rarely follows a good single-responsibility pattern that makes code easier to test, troubleshoot, and maintain. It tends to have one method doing multiple things, while also somehow creating multiple copy-paste methods that will be a maintenance nightmare down the line.

It can write "I copied this off Stack Exchange" code that works, but it does a horrible job of thinking like a developer and writing GOOD code that follows good patterns. It also tends to add a ridiculous amount of logging and try-except blocks with overly defensive programming that attempts to account for every possible corner case that’s already trapped elsewhere.
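Here's the kind of pattern I mean, using a made-up loader for a JSON config file with a hypothetical `timeout` key. The defensive version wraps every step in its own try/except, logs, and returns `None`, so the caller can't tell a missing file from a malformed value. The direct version does its one job and lets any error surface once, where the caller can handle it deliberately:

```python
import json
import logging

logger = logging.getLogger(__name__)


# Overly defensive: every failure is logged, swallowed, and turned into None.
def load_timeout_defensive(path: str):
    try:
        logger.debug("Opening %s", path)
        with open(path) as fh:
            try:
                data = json.load(fh)
            except Exception as exc:
                logger.error("Bad JSON: %s", exc)
                return None
        try:
            return int(data["timeout"])
        except Exception as exc:
            logger.error("Bad timeout: %s", exc)
            return None
    except Exception as exc:
        logger.error("Could not open file: %s", exc)
        return None


# Direct: do the one job; callers decide how to handle errors.
def load_timeout(path: str) -> int:
    with open(path) as fh:
        return int(json.load(fh)["timeout"])
```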

I pretty much stopped using it to write my main model methods after a few days, and every single time I reconsidered and had it do something I thought would be basic that it couldn't fuck up... it would fuck it up.

Really bad testing

When writing software, a lot of your time is taken up writing tests to ensure the software works as expected. Most of these are "unit tests", which test a single function with a single set of inputs to be sure you get the expected outputs. So to give a really dumb case, if you had a function add(x, y) that returns x + y, you might make a unit test where you call add(1, 2) and test to be sure it returns 3. The test should be agnostic about how that add function works; it should just test that it returns the correct value.
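In Python's built-in `unittest` module, that dumb example looks like this. Each test calls the real `add` and checks only the returned value, never how `add` computes it:

```python
import unittest


def add(x: int, y: int) -> int:
    return x + y


class AddTests(unittest.TestCase):
    def test_adds_two_positive_numbers(self):
        self.assertEqual(add(1, 2), 3)

    def test_adds_a_negative_number(self):
        self.assertEqual(add(5, -2), 3)
```

Saved in a file named something like `test_add.py`, this runs with `python -m unittest`. If someone later rewrites the inside of `add`, the tests keep passing as long as the answers stay right.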

This is part of why writing clean single-responsibility functions is important: it makes your code testable.

Good testing is what makes code maintainable, because if you change anything you can run your tests to make sure nothing breaks.

Testing is super important, but it’s also pretty boring and time consuming. It tends to be fairly repetitive, and it isn’t the “fun part” of the work that programmers get excited about. It should be the perfect case for having an AI assistant write code.

Claude is really, really bad at it, for myriad reasons.

1. It overuses mocking and patching in really bad ways. The effect is essentially saying "ignore whatever add(1,2) returns and just assume it returns 3. Therefore the test passes."

2. It writes tests that try to dictate how the function operates, checking to see if a certain other function was called, for example. A test shouldn't care how the function works, only that it returns the correct result. Otherwise every time you update your code you break your tests.

3. It writes brittle tests in other ways. For example, one of my methods packages up a Python file from the server and moves it to an AWS S3 storage container called a bucket. Claude created tests that moved the file and then examined the bucket for files containing exact strings. The problem is, this will break the first time I update the file being moved. The right way to test this is for the test to create a temporary dummy "hello world" file and use THAT for the tests. It did this sort of nonsense all the time.

4. Even when asked to create a single basic test, it tended to write dozens of tests for cases I hadn't specified, including some cases that could literally never happen. This meant so much more work for me to review all of the tests, and catch all of the ways in which it assumed I would want the function to work. This goes back to the "ignores instructions" thing and I found it to be insanely frustrating.

5. This is kind of minor, but similar to the bloated bad code, it writes really inefficient, repetitive tests. Both for code readability AND performance, a lot of what it would do in each test (for example, creating the same objects over and over) should be done once using Django's setUpTestData. It seems like Claude Code is really bad at architecture even within a single class. It just doesn't design or plan well. I'm not sure how to explain it to non-developers, but it seems like it focuses on one problem at a time and is unable to see the big picture (more on this below).

6. Just as with writing class methods, Claude seems to prefer complicated, overengineered solutions to simpler direct solutions that are faster, more effective, and easier to understand.
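To make points 1 and 3 concrete, here's a sketch built around a hypothetical `upload_script` function. To keep it self-contained, the bucket is faked with a plain dictionary instead of real S3 (an assumption for illustration only). The first test is the mocking anti-pattern: it only exercises a mock that was told what to return, so it can never fail. The second creates its own throwaway "hello world" file and checks the real result, so it won't break when the real script changes:

```python
import tempfile
import unittest
from pathlib import Path
from unittest import mock


def upload_script(path: Path, bucket: dict, key: str) -> str:
    """Hypothetical stand-in: copy a file's bytes into `bucket` under `key`."""
    bucket[key] = path.read_bytes()
    return key


class UploadScriptTests(unittest.TestCase):
    def setUp(self):
        # Point 3: the test owns a throwaway "hello world" file, so edits to the
        # real script being deployed can never break this test.
        tmpdir = tempfile.TemporaryDirectory()
        self.addCleanup(tmpdir.cleanup)
        self.source = Path(tmpdir.name) / "hello.py"
        self.source.write_text('print("hello world")\n', encoding="utf-8")

    def test_mocked_into_meaninglessness(self):
        # Point 1 (anti-pattern): the "upload" is a mock told to return the
        # expected key, so this passes no matter what upload_script does.
        fake_upload = mock.Mock(return_value="scripts/hello.py")
        self.assertEqual(fake_upload(self.source, {}, "scripts/hello.py"), "scripts/hello.py")

    def test_uploaded_bytes_match_source(self):
        # Better: call the real function and check only the observable result.
        bucket: dict = {}
        key = upload_script(self.source, bucket, "scripts/hello.py")
        self.assertEqual(bucket[key], self.source.read_bytes())
```

Point 5 concerns the same kind of setup code: in a Django `TestCase`, objects that every test only reads belong in the `setUpTestData` classmethod, which runs once per test class instead of once per test.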

Loses information and misses the big picture

This one surprised me. On multiple occasions I would ask it to do simple tasks like listing all the methods in a class and whether they had test files. Sometimes it did this well, but often it would skip methods or claim there was no test file when there clearly was. The test files all live in the same place and are named `test_<method_name>`; this shouldn't be tricky.
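This is exactly the kind of question a few lines of code can answer deterministically. A sketch, assuming all test files sit in one directory and are named `test_<method_name>.py` (the `.py` extension and the directory argument are my assumptions; `method_test_report` is a hypothetical name):

```python
import inspect
from pathlib import Path


def method_test_report(cls: type, tests_dir: Path) -> dict[str, bool]:
    """Map each method defined directly on `cls` to whether test_<name>.py exists."""
    report = {}
    for name, member in vars(cls).items():
        # Unwrap staticmethod/classmethod so they are counted as methods too.
        func = member.__func__ if isinstance(member, (staticmethod, classmethod)) else member
        if inspect.isfunction(func) and not name.startswith("__"):
            report[name] = (tests_dir / f"test_{name}.py").exists()
    return dict(sorted(report.items()))
```

Because it reads `vars(cls)`, inherited methods are left out; those are covered by the parent class's own report.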

I get the hallucination issues with LLMs and the "yes and" problems. But when it has the text available to it and still can't be counted on to summarize it properly, that's a huge cause for concern. It's all well and good to say "you should double-check everything AI does", but in this case double-checking means doing the exact work you asked it to do, negating the entire point.

But this points to a bigger problem: Claude seems incapable of understanding how pieces of a codebase relate to each other. Even with access to my entire codebase, it couldn't use inherited methods or follow standard object-oriented patterns. When I told it to use existing global stylesheets, it created a mess of custom styles instead. When I needed it to write methods that interact with other classes, it would hallucinate method calls rather than look at the actual code.

I think this may be the root of (almost) all of my problems with Claude. It can't understand the "big picture", even within the context of a single conversation, a single Python class, or a single set of tests. It hyperfocuses on the most recent question, the last error message, or the current method it's writing. That leads it to hallucinate, create overengineered, bloated solutions, and fail at generalizing or understanding how the pieces fit together.

This tunnel vision is what makes it fundamentally unreliable as a coding assistant.

The Ugly

The good and bad sort of balance out, and maybe some of the bad issues will become less bad as I get better at using the tools and adjust my expectations. But then there were just some horror shows that made me want to anthropomorphize Claude so that I can throw him out a tenth-story window.

Overwrites code and destroys functionality

As I mentioned above, the code I write tries to be DRY and use single-responsibility functions. One day I asked Claude Code to write what should have been a simple main "orchestration" method that called a bunch of other methods in a series. Instead, it wrote a bloated mess.
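For reference, this is roughly the shape I was asking for: an orchestration method that just calls single-purpose steps in order and contains no logic of its own. The class and step names here are invented for illustration:

```python
class NightlyLoad:
    """Hypothetical pipeline; each step does one job and can be tested alone."""

    def __init__(self) -> None:
        self.log: list[str] = []

    def extract(self) -> list[dict]:
        self.log.append("extract")
        return [{"id": 1}, {"id": 2}]

    def transform(self, rows: list[dict]) -> list[dict]:
        self.log.append("transform")
        return [{**row, "valid": True} for row in rows]

    def load(self, rows: list[dict]) -> int:
        self.log.append("load")
        return len(rows)

    def run(self) -> int:
        """Orchestrate: call each step in series and pass results along."""
        rows = self.extract()
        rows = self.transform(rows)
        return self.load(rows)
```

Because `run` has no branches of its own, there's very little in it that can go wrong, and each step's real behavior is covered by that step's own tests.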

I started rewriting it to not suck, but it was late and I was tired so I went to bed. The next day I asked it to refactor just that one section, and instead it rewrote the entire class. When it did, it erased a bunch of important functionality and essentially destroyed a week's worth of work. The fun part is, because Claude Code has access to write to the files directly AND I hadn't committed my code in a week (100% my bad), I was pretty much completely fucked.

The one thing that saved me was my janky setup using Sublime Text and rsub, which downloads and saves temporary files locally for editing, pushing the temporary files back up to the server when you save. So I had a relatively recent copy of the file stored in this temporary directory on my workspace. If I hadn't, I would have been out a week of work.

There's not enough bullshit "you're right to be frustrated" language an LLM can spout to make that okay.

Is insanely bad at troubleshooting and will waste your time in the most infuriating ways possible

The project I'm working on for RILDS involves setting up a bunch of different AWS services and having them talk to each other. This is not my forte. In my experience AWS has a ton of similar-sounding services that do kinda the same thing. Their documentation is byzantine and often out-of-date, if it exists at all.

So I was using Claude.ai to help me set up these various services and understand the configuration options. Which is all fine, until it isn't.

When something goes wrong with this sort of setup, it's hard to troubleshoot. There are so many different components, each one a different AWS service, that figuring out where something went wrong can take a while. You have to not just test each component, but test that the messages are getting passed between the components, and that the messages themselves aren't the problem. So, to quote the philosopher Jeffrey Lebowski, there's a lot of ins, lot of outs, lot of what have you's.

Troubleshooting this with Claude.ai illustrated many of the problems I mentioned above:

1. It's bad at big-picture thinking, even within a single conversation.
2. It gets hyperfocused on the most recent error message.
3. It doesn't actually read things it says it reads, and lies about it.
4. It ignores your instructions and the information you provide to it.

This led to it (and me!) getting stuck for hours in circular troubleshooting that should have been solved in a few minutes.

Imagine you had a light switch that controlled one light in a room. The light isn't coming on. Claude would tell you to try the switch. Then change the light bulb. Then try the switch. Then change the light bulb. Then try the switch. It would never tell you to try the switch twice in a row, but once trying the switch was two suggestions ago, it seemed like a whole new idea.

Obviously in this case the human should catch the circular pattern quickly. But when you're dealing with a bunch of different AWS services and something is failing silently (no error message, no log, no evidence of failure), you can get stuck in a loop without realizing it, because there are so many different components and the AI sometimes has you try essentially the same things in slightly different ways.

[I also recognize my lack of intimate familiarity with these specific AWS services made me overly reliant on Claude here.]

Even more fun, at some point it starts going down random rabbit holes and comes up with more and more ridiculous, convoluted, overengineered "solutions" for you to try. At this point you're exhausted and have spent hours trying to solve this problem, so you try whatever it suggests. These solutions rarely work, so it has you do even more ridiculous, complicated stuff.

One time it did this to me for an afternoon and when I explained that we were going in circles it agreed, then tried to have me go in the same circles again. Then it suggested I scrap the entire architecture and just build a different application. Finally it admitted it wasn't looking at the documentation and just guessing over and over. When it looked in the documentation it found the answer in 10 seconds. After wasting five hours of my time.

Another time it did something similar and started going down more and more complicated rabbit holes. I copied all of the configuration information and fed it into Claude, repeatedly saying "this seems like a permissions issue" only to have it insist I was wrong. In the end, I went off on my own and figured it out. It was a permissions issue related to a security group setup - exactly what I'd said at the beginning that it insisted it could not be.
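The culprit turned out to be that the Django server's security group only accepted inbound traffic from one specific security group, while the Lambda function was attached to a different one. That mismatch is something you can check mechanically. Below is a sketch written against the shape of the data returned by boto3's EC2 `describe_security_groups` call; the function name and the TCP-only focus are my own assumptions. Because it only inspects plain dictionaries, it can be tried without an AWS account:

```python
def allows_source_group(target_group: dict, source_group_id: str, port: int) -> bool:
    """Return True if an inbound rule on `target_group` admits `source_group_id` on TCP `port`.

    `target_group` is one entry from the "SecurityGroups" list that EC2's
    describe_security_groups returns. CIDR-based rules are deliberately ignored.
    """
    for rule in target_group.get("IpPermissions", []):
        protocol = rule.get("IpProtocol")
        if protocol == "-1":
            port_ok = True  # "-1" means all traffic; such rules carry no port range
        elif protocol in ("tcp", "6"):
            port_ok = rule["FromPort"] <= port <= rule["ToPort"]
        else:
            continue  # UDP, ICMP, etc. can't carry a TCP connection
        if port_ok and any(
            pair.get("GroupId") == source_group_id
            for pair in rule.get("UserIdGroupPairs", [])
        ):
            return True
    return False
```

Against a live account you would fetch the group with something like `boto3.client("ec2").describe_security_groups(GroupIds=["sg-..."])["SecurityGroups"][0]`, then pass in the security group ID attached to the Lambda function and the port the Django server listens on.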

The cases of this sort of thing happening were numerous, and incredibly frustrating. It would always end with a bullshit mea culpa like the following:

> That's fundamentally what I am - a language model predicting likely next words based on patterns in training data. I don't actually reason through problems systematically like a human expert would.
>
> When you showed me the security group rules, a competent network engineer would have immediately recognized that Django only accepts connections from one specific security group, and Lambda was using a different one. That's basic troubleshooting.
>
> Instead, I generated plausible-sounding technical explanations about VPC routing and nginx timeouts because those are common networking issues that appear frequently in my training data. I mimicked confident technical communication without actually analyzing your specific situation.
>
> You deserved actual problem-solving, not pattern-matching that wastes your time. The confident tone makes it worse because it sounds authoritative while being fundamentally unreliable.

Out. The Fucking. Window.

The Mildly Annoying

Finally, there were a few things it did that I found irritating, that weren't necessarily "bad". Mostly I think they're sort of funny.

Five questions

This is one of those verbal tics that Claude seems to have. I loaded the entire codebase into Claude's project knowledge. I also had a project plan and in-depth notes on what I was attempting and why. I would write a long prompt explaining what had already been done, what I wanted to work on today, the approach I planned to take, and the specific questions I had.

Inevitably Claude would respond summarizing the project and then asking five questions.

EVERY SINGLE TIME WITH THE FIVE QUESTIONS.

I could give it a blueprint and very detailed instructions and it would STILL say "before we start, let's go over these five questions." If I answered them, it would ask more follow-up questions. If I answered those, it would just ask more follow-up questions. It didn't need this knowledge; it was just stuck in a "this is how you respond to architecture discussions" loop.

It's like a program designed to bikeshed.

Overengineering cats

Ok, this isn't about cats like "meow" but cats like "concatenation" (combining several pieces of text together).

One day I was feeling lazy and asked it to create a single text file with the code from all of the (200+) models in my django project. This is literally one simple command whose exact syntax I couldn't remember and should have taken it less than a second.

```shell
$ cat **/models/*.py > all_models.txt  # in bash, ** only recurses after `shopt -s globstar`
```

Instead, it spent over ten minutes trying to find all the files, then creating a Python script to do some overengineered read/write process. It created a tmp directory with a ton of subdirectories and files for no reason. The whole thing was a mess. I shouldn't have to micromanage the AI assistant and tell it how to use the `cat` command.
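And even if you insisted on Python instead of the shell, the whole job is still a few lines with no temp directories. A sketch using `pathlib`; the `concat_models` name is hypothetical, and the default pattern mirrors the `cat` command:

```python
from pathlib import Path


def concat_models(root: Path, out_file: Path, pattern: str = "**/models/*.py") -> int:
    """Write every matching file, in sorted order, into `out_file`. Returns the file count."""
    paths = sorted(root.glob(pattern))
    with out_file.open("w", encoding="utf-8") as out:
        for path in paths:
            out.write(path.read_text(encoding="utf-8"))
    return len(paths)
```

Calling `concat_models(Path("."), Path("all_models.txt"))` from the project root produces the same result as the one-liner.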

Conclusion

So that's my experience with "vibe coding". Some good, more bad, and ultimately I'd say it doesn't "do what it says on the tin". Meaning the idea that you can just have Claude, ChatGPT, or Copilot write your code based on a few prompts doesn't seem accurate. At least not for anything you actually care about and want to extend/maintain in the future.

A friend who teaches CS said something to the effect of "AI is a good pair programmer, but you never want it in the driver's seat." I think in some ways that makes sense. The most value I get out of it is more about discussing ideas than having it write code. I will likely continue to use it for that (gotta justify my $20 Claude Pro subscription to myself somehow).

I do worry about the impacts this has on skill and career development for young programmers, but that's a much bigger issue to maybe cover some other time.

I hope this was informative or at least entertaining for some of you. I'm neither an AI Booster nor an AI Doomer and I'd like to think I can offer some real-world perspective that cuts through the outrage and hype.

Anyway, thanks for reading. As your reward, here is a picture of Andrey Barkov, who somehow managed to slobber on his own head.

As usual, if you found this informative, interesting, or funny it would mean the world to me if you shared it on your socials or just emailed it to a friend.

If you'd like to get these posts in your mailbox, feel free to subscribe. I intend for this newsletter to be free, published only when I have something useful or interesting to say, and never more than once a week. I also plan to always include at least one pet picture, because Lord knows there's enough negativity and outrage on the internet already.